[ 2016-October-30 09:27 ]

I work at Bluecore where, like any growing application, we periodically run into interesting performance problems. Most of our service runs on App Engine, which has pretty good automatic scaling. That causes most performance issues to show up as large bills, rather than production failures. This is good news for our customers, but bad news for Bluecore, so we keep a close eye on our costs. While investigating a cost increase, we discovered that the Datastore was taking nearly a second to return 20 objects, instead of the normal 100 ms. In this article, I'll talk about how we used traces and logging to find the problem, then monkey patched Google's code to fix it. I think there are some generally applicable lessons, but the details may only be interesting to the unfortunately small number of people who use App Engine. TL;DR: App Engine's older Python Datastore library is very slow when serializing big objects. Traces helped make the problems visible, but logs pinpointed the issue.

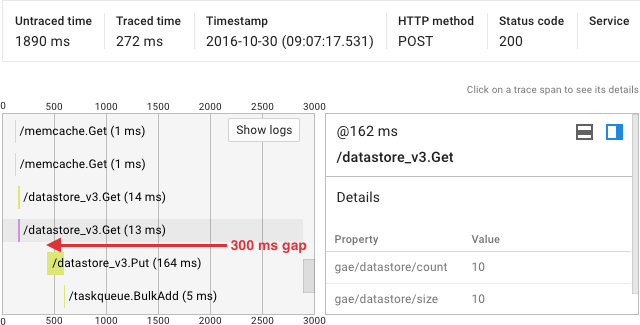

We store a lot of data in the Google Cloud Datastore, which is a slightly weird key/value store with lots of features. For the purposes of this discussion, all that matters is that you can look up objects by key. We had implemented a new feature that read a batch of 20 objects using their key, which we expected to take no more than around 100 ms. Unfortunately, our monitoring showed this new feature was very slow, sometimes taking more than a second. App Engine traces a fraction of all requests, so we found a slow request and looked at the trace. We saw something like the following:

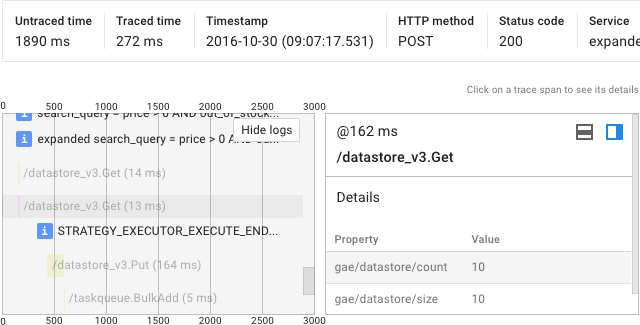

This shows a backend task taking around two seconds to return a result. The selected Datastore get took 13 ms, and the details on the right shows it returned 10 entities. Around 300 ms later the application then does a Datastore put. In the worst cases, we saw a gap after a get of up to a second. So what the heck was happening? To get more detail, the trace tool can also show us our application logs:

The gap between the Datastore get and the log message is about 250 ms, so we still are missing a lot of time. However, the requests combined with the log messages told us approximately what chunk of code was responsible. To track it down further, we logged the execution time of small pieces of the suspicious code. It turns out that the db.get API call was taking basically all the time. This was confusing at first: the trace showed the Datastore get returning after 100 ms, but our logs showed the function call taking a second. I suspected this meant that the Datastore was giving our application the data in the ~100 ms reported in the trace, but whatever the Python library was doing after that was slow.

While App Engine does have some private implementation pieces, the bulk of the code appears to be what they provide with the development kit. I walked through the code to see if I could find anything obviously slow. It turns out the older db library we use is built using an undocumented API called datastore.GetAsync. This returns an Entity object, that the db library then converts to the application's custom Model class. Since we were now down to a single function call, I wrote a microbenchmark which thankfully demonstrated the problem, and showed that datastore.GetAsync was about 2X faster. We tried deploying that fix.

While this did run significantly faster, there were still cases that were extremely slow. We logged the object keys for slow requests, then investigated using the microbenchmark. We found that the slow objects had more than 100 attributes, and that more attributes made deserialization slower. Looking more carefully at the implementation, it became clear why. The code converts all attributes from bytes to Python objects. However, for our feature, we only needed about four or five attributes, so this was wasted effort. Unfortunately, there was no easy API that skipped this step.

You can't change the core libraries on App Engine, since Google provides them for you. However, this is Python and everything is mutable. Eventually we found a place where we could monkey patch their internal API to skip the conversion, and just return the "raw" data from the Datastore. The microbenchmark showed that solved the performance problem. To get the attributes we needed, we wrote a wrapper that converted them lazily. This was around 4-5X faster than the original API, and allowed us to reach our performance target.

Lessons

- Per-request logs are a performance tool, but they don't need to be fancy: The App Engine/Google Cloud tracing tool is pretty sophisticated, but in this case all we needed was timestamped messages from a single request. However the visualization does make gaps obvious. It is also helpful that the trace captures metadata about each App Engine API call by default (e.g. the number of entities returned). I think the general lesson is that if you are building core APIs, include some way to log a summary of each operation. For more examples of how to use tracing tools for performance, see Dan Luu's summary of a Dick Sites talk.

- Don't layer abstractions that do the same thing: Usually abstractions are good, since they provide a simpler interface and hide a lot complexity. In this case, the original App Engine db API is designed to provide a friendly interface to the datastore. However, it is built on top of another API that had the exact same goal! That API is built on the lower-level Datastore protocol, which is definitely a bit ugly and un-Pythonic. The result is there are two API layers that do the same thing, so we are doing the same work twice. The second datastore API, ndb, recognized this and cuts out the middle man, and as a result has better performance.

- Reproduce hard bugs with the smallest amount of code possible: Writing a microbenchmark that reproduced the problem was critical for figuring out this problem. Without it, the iteration cycle between generating a theory about what could be causing the issue and testing it would have been way too slow, particularly since this was only happening in our production environment. Don't be afraid to write more code to help isolate and find your bug.

Performance notes for App Engine users

If you use App Engine with Python, I've summarized some benchmark results from my microbenchmark. If you want to re-run these tests yourself, I've published the microbenchmark, as well as the LazyEntity class and monkey patch that does lazy deserialization. The worst part is that after I did all this work, I found that Matt Faus found the same problem in December 2014. His solution is similar, so you may want to evaluate both if you run into this issue.

- db deserialization is slower than ndb: ndb converts from the Datastore protocol buffer response to your Model, while db first converts it to an Entity.

- db.Expando is twice as slow compared to db.Model, but for ndb it makes no difference: db.Expando makes a second conversion pass over the protocol buffer attributes. For ndb they only make one.

- The Standard Environment has very fast protocol buffer serialization compared to the Flexible Environment: I suspect native code is used in the Standard Environment, while pure Python is used in the Flexible Envirnoment.

For even more details, see the notes on my datastore performance microbenchmark.